WINNING A HACKATHON


CONTEXT AND OBJECTIVE

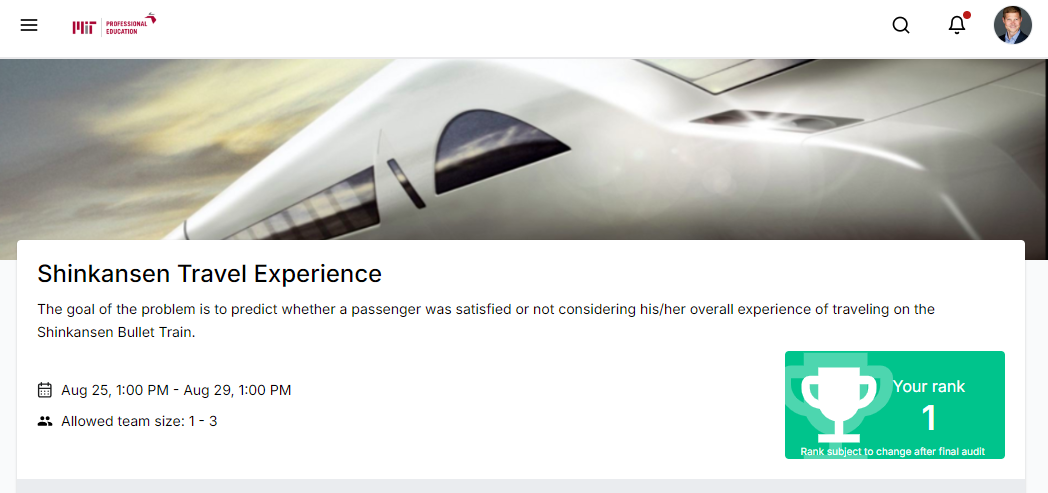

As part of the MIT Applied Data Science Program, a hackathon was organized during the final week, just before the completion ceremony. It was an opportunity to connect and compete with skilled fellow students, apply what we had learned, and simply have fun! Out of a cohort of 400 students, about 80 enrolled in the hackathon, myself included. I ended up winning the competition.

While I am not allowed to share the details of the data science case we worked on, suffice it to say that the goal was to solve a binary classification problem (predicting the overall satisfaction of Shinkansen travelers) on a tabular dataset consisting mostly of categorical features.

WHAT WAS DONE

I started the competition with a plan in mind:

- Do a quick first end-to-end iteration to get a good overview of the problem.

- Perform an exploratory data analysis (EDA), improve the data preprocessing, pick a good model, and fine-tune it.

- Explore additional ideas to improve the solution, and hopefully gain a few positions on the leaderboard.

Steps 1 and 2 got me to 3rd place, and step 3 took me to 1st place.
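Because the hackathon data cannot be shared, here is a minimal sketch of what a quick first end-to-end iteration (step 1) might look like on a hypothetical toy dataset. Everything in it is an assumption for illustration: the feature names (`seat_class`, `wifi`, `delay`), the invented rule that generates the labels, and the choice of a Laplace-smoothed categorical naive Bayes classifier as the first model. It is not the approach used in the competition. The sketch uses only the Python standard library and compares that classifier against a majority-class baseline on a hold-out split.

```python
import math
import random
from collections import Counter, defaultdict


def make_toy_data(n, seed=0):
    """Hypothetical stand-in for the confidential dataset (invented features and rule)."""
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n):
        row = {
            "seat_class": rng.choice(["ordinary", "green"]),
            "wifi": rng.choice(["poor", "ok", "good"]),
            "delay": rng.choice(["none", "short", "long"]),
        }
        # Invented rule: each favorable feature raises the probability of satisfaction.
        favorable = (
            (row["seat_class"] == "green") + (row["wifi"] == "good") + (row["delay"] == "none")
        )
        labels.append(1 if rng.random() < 0.15 + 0.25 * favorable else 0)
        rows.append(row)
    return rows, labels


def fit_majority(labels):
    """Most frequent training label, used as a trivial reference model."""
    return Counter(labels).most_common(1)[0][0]


def fit_naive_bayes(rows, labels, alpha=1.0):
    """Count label frequencies and (feature, value, label) co-occurrences."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature, value) -> Counter of labels
    seen_values = defaultdict(set)       # feature -> set of observed categories
    for row, y in zip(rows, labels):
        for feature, value in row.items():
            value_counts[(feature, value)][y] += 1
            seen_values[feature].add(value)
    return class_counts, value_counts, seen_values, alpha


def predict_naive_bayes(model, row):
    """Return the label with the highest log posterior (up to a constant)."""
    class_counts, value_counts, seen_values, alpha = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_label in class_counts.items():
        score = math.log(n_label / total)  # log prior
        for feature, value in row.items():
            count = value_counts[(feature, value)][label]
            # Laplace-smoothed log likelihood of this category given the label
            score += math.log((count + alpha) / (n_label + alpha * len(seen_values[feature])))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Quick end-to-end run: generate data, split, fit, evaluate.
rows, labels = make_toy_data(3000, seed=42)
split = int(0.8 * len(rows))
train_rows, valid_rows = rows[:split], rows[split:]
train_labels, valid_labels = labels[:split], labels[split:]

majority = fit_majority(train_labels)
baseline_acc = accuracy([majority] * len(valid_labels), valid_labels)

model = fit_naive_bayes(train_rows, train_labels)
nb_acc = accuracy([predict_naive_bayes(model, r) for r in valid_rows], valid_labels)

print(f"majority-class baseline: {baseline_acc:.3f}")
print(f"naive Bayes baseline:    {nb_acc:.3f}")
```

The value of such a first pass lies less in the model than in having a working pipeline and a reference score. The EDA, better preprocessing, and a stronger, tuned model from the later steps can then be measured against that score.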